AI security and development: A developer’s 2025 guide

The dual edge of AI: Innovation and the imperative of security

AI is rapidly transforming industries, unlocking unprecedented innovation and efficiency. Yet, with this immense power comes a critical responsibility: ensuring the security of these intelligent systems. For developers and cybersecurity professionals, navigating the dual challenge of rapid AI innovation and robust security is paramount.

AI security and development refers to the comprehensive process of embedding proactive security measures throughout the entire AI development lifecycle while simultaneously leveraging AI to enhance cyber defense capabilities. If you’ve ever deployed an AI model, you know the pressure to innovate quickly, but also the hidden complexities of securing your creation.

As you stand at the forefront of AI innovation, you’re also facing unprecedented security challenges. New attack vectors and complex vulnerabilities demand a proactive approach. This guide from Konvergense bridges the gap between AI innovation and robust security, offering practical frameworks to ‘shift security left’ in your AI/ML pipelines. With Konvergense’s 10+ years industry experience in digital transformation and AI solutions, we understand these challenges intimately.

In this guide, you will learn how to build resilient AI systems, secure generative AI, and harness AI for real-time cyber defense. Our aim is to equip you with the knowledge to not just build powerful AI, but secure it from the ground up.

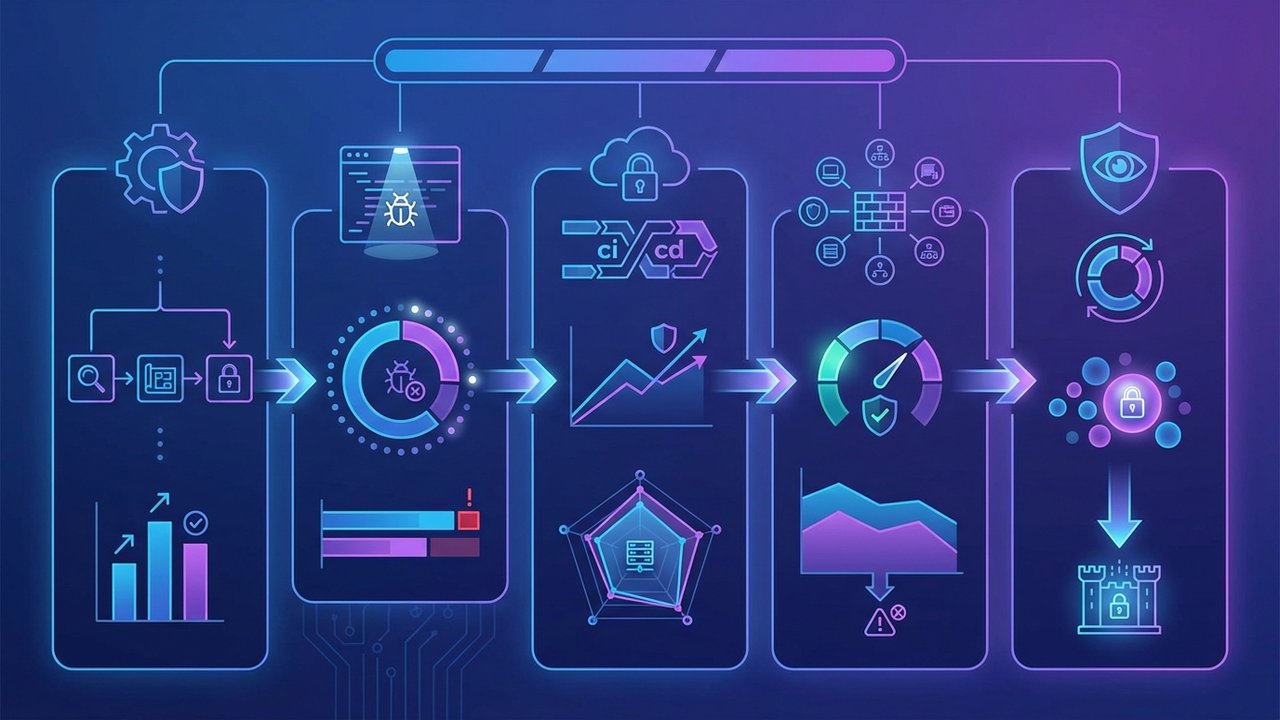

Shifting security left: Embedding protection throughout the AI lifecycle

For AI systems, ‘shift left’ security is critical because proactively addressing security from the design phase significantly reduces costs and mitigates risks long before deployment. Think of building a house: it’s much cheaper and safer to fix a foundation issue during planning than after the roof is on and the walls are up. This analogy perfectly illustrates why integrating security early in the AI development lifecycle is non-negotiable.

Traditional software development has embraced “shift left” principles, moving security considerations earlier into the development process. For AI/ML systems, this philosophy is even more vital due to their unique complexities, such as data dependencies, model opacity, and dynamic environments. By embedding security at every stage, you can proactively defend against unique AI threats like data poisoning, model evasion, and prompt injection.

Customizing the secure software development framework (SSDF) for AI/ML

The Secure Software Development Framework (SSDF) provides a structured approach to building secure software. It can be customized for specific AI use cases by mapping its four core practices—Prepare, Protect, Produce, and Respond—to each stage of the AI development lifecycle, from data ingestion to model deployment.

The SSDF principles offer a robust foundation:

- Prepare the Organization: Establish security roles, training, and a secure development environment.

- Protect the Software: Safeguard all software components from tampering and unauthorized access.

- Produce Well-Secured Software: Integrate security activities throughout the development process.

- Respond to Vulnerabilities: Create plans to identify, triage, and remediate vulnerabilities effectively.

Applying SSDF to AI/ML means translating these phases to your data acquisition, model training, pipeline development, and deployment stages. For instance, in the ‘Prepare’ stage, you’d define AI-specific threat models focusing on data poisoning, model evasion, or prompt injection. During ‘Protect,’ you’d implement secure data handling for training sets, including anonymization and access controls, and secure model registries.

Key considerations for AI-specific threat modeling include identifying unique attack surfaces. These can range from training data integrity and model parameters to inference endpoints and API access. For example, threat modeling for a recommendation engine might involve asking:

- Could malicious user input poison the training data, leading to biased recommendations?

- Could an attacker manipulate model parameters to push specific products?

- Are inference requests vulnerable to prompt injection, causing the model to reveal sensitive logic?

This proactive approach ensures security is embedded, not bolted on, addressing unique AI risks.

Further Reading: Learn more about SSDF from the experts at the National Institute of Standards and Technology (NIST). Their NIST Special Publication 800-218 provides comprehensive guidance on SSDF.

Secure coding and pipeline practices for AI systems

Secure coding standards for AI-generated code are becoming increasingly important. As AI-assisted coding tools become commonplace, it’s crucial to apply best practices for reviewing and hardening this code. This includes rigorous input validation, diligent dependency management, and adherence to established secure coding guidelines.

Securing ML pipelines involves protecting every stage. This means safeguarding data ingress and egress points, implementing robust model versioning, enforcing strict access controls, and isolating development and production environments. For example, when building secure AI systems that handle sensitive financial data, such as those used in banking AI in the GCC, secure data handling and strict access controls are paramount. Learn more about AI in banking and financial services automation here.

Continuous monitoring and testing are vital for AI systems. Implement automated security testing tools like Static Application Security Testing (SAST) for code analysis, Dynamic Application Security Testing (DAST) for runtime vulnerabilities, and Interactive Application Security Testing (IAST) tailored for AI components. These tools help identify weaknesses that might emerge as models evolve or data changes.

Establishing a common vocabulary is also crucial. Bridging the communication gap between AI engineers, data scientists, and security teams ensures everyone understands the security implications of their work. Regular cross-functional training sessions can foster this shared understanding.

Data security and privacy in AI development

Protecting sensitive training data is a cornerstone of AI security. Strategies like anonymization, differential privacy, and secure storage solutions are essential. Anonymization removes personally identifiable information, while differential privacy adds noise to data to protect individual records without compromising the dataset’s utility for model training.

Implementing least privilege for data access across the AI team is a best practice for access control. This means granting users only the minimum access necessary to perform their tasks, reducing the risk of unauthorized data exposure. Regularly review and update access policies.

Data governance and compliance are critical, ensuring adherence to regulations like GDPR and CCPA within AI workflows. Establish clear policies for data collection, usage, storage, and deletion, and conduct regular audits to verify compliance. This proactive approach helps avoid costly legal and reputational damages.

Preventing data leakage is another key concern. Strategies to minimize exposure during model development and deployment include:

- Data Masking: Obscuring specific data points to protect sensitive information during development.

- Secure Sandboxes: Using isolated environments for model experimentation.

- Output Filtering: Ensuring model outputs do not inadvertently reveal sensitive training data.

Navigating the new frontier: Securing generative AI and foundation models

Generative AI introduces a unique attack surface, presenting novel vulnerabilities that differ significantly from traditional software. Unlike deterministic systems, generative models can produce varied outputs based on complex, often opaque internal states. This inherent flexibility, while powerful, creates new avenues for malicious exploitation.

The “dual-use” challenge is particularly pertinent: foundation models can be used for both beneficial and malicious purposes. A large language model (LLM) capable of writing compelling marketing copy can also be leveraged for sophisticated phishing scams or to generate disinformation. This inherent versatility makes securing them a complex and evolving task.

These risks are critical because the rapid evolution of attack vectors requires specialized attention for cutting-edge AI. As generative AI becomes more integrated into business applications—from enhancing marketing campaigns to customer service—securing these tools is paramount. For instance, when leveraging AI-powered marketing on a budget, ensuring the security of those AI tools is as important as their efficacy.

Understanding and defending against novel attacks

Generative AI models are susceptible to several unique attack types:

- Prompt Injection: Malicious prompts can hijack LLM behavior, overriding safety mechanisms or extracting sensitive information.

- Defense techniques: Input validation and sanitization, privilege separation (limiting what an LLM can access or do), and AI firewalls that filter or rewrite suspicious prompts.

- Data Poisoning: Manipulating training data to compromise model integrity, introduce backdoors, or inject biases.

- Strategies: Robust data sanitization, secure data provenance (tracking data origin), and continuous validation of training datasets.

- Model Inversion Attacks: Reconstructing sensitive training data from model outputs.

- Mitigation: Differential privacy during training, output filtering, and careful design of model APIs to limit information leakage.

- Membership Inference Attacks: Determining if specific data points were part of the training set, posing privacy risks.

- Techniques: Privacy-preserving training methods and careful control over model access and output.

Securing large language models (LLMs) and autonomous agents

API security for LLMs is crucial, requiring robust authentication, authorization, and rate limiting. Implementing secure API gateways helps manage access and protect the models from abuse. Treat LLM APIs with the same rigor as any critical business API.

For autonomous agent frameworks, best practices include securing agent design, tool access, and decision-making processes. Agents often have access to external tools and systems, making their security paramount. Implement strict access policies for tools and ensure agent decisions are auditable and reversible.

Preventing data leakage and unauthorized access involves implementing robust access controls and data isolation in generative AI systems. This might mean segregating different LLM instances or ensuring sensitive data never enters the generative model’s context window.

Verifying model integrity is essential for detecting manipulation, bias, and ensuring consistent, trustworthy outputs. Techniques include cryptographic attestation of models, continuous monitoring of model performance, and regular audits of training data and pipelines.

Ethical AI and responsible deployment

Addressing bias and fairness is a critical aspect of securing generative AI. Ensure models are not perpetuating or amplifying harmful societal biases. Regularly audit models for fairness metrics and implement bias detection and mitigation strategies throughout the development lifecycle.

Transparency and explainability (XAI) are crucial for making AI decisions understandable and auditable. For security and ethical review, you need to understand why an AI model produced a particular output. Implement explainability techniques to gain insights into model behavior.

The challenge of “hallucinations” – where generative AI produces factually incorrect or misleading information – must be mitigated. Implement fact-checking mechanisms, provide clear disclaimers, and design systems that allow for human oversight and correction of outputs.

Establishing clear usage policies and governance for generative AI tools within your organization is vital. Define acceptable use, data handling protocols, and review processes to ensure responsible deployment and minimize risks.

Further Reading: The Cybersecurity and Infrastructure Security Agency (CISA) provides valuable insights into emerging cyber threats, including those involving AI. Check their official publications for the latest advisories.

Leveraging AI for advanced cyber defense and incident response

The paradox of AI is that while it presents new attack vectors, it also serves as a powerful weapon in the cybersecurity arsenal. For those focused on ‘AI power cyber security,’ harnessing AI proactively for defense is a strategic imperative. AI can significantly augment human defenders, offering speed, scale, and pattern recognition capabilities far beyond human limitations.

AI-driven solutions can process vast amounts of data, identify subtle anomalies, and respond to threats at machine speed. This capability is crucial in a landscape where human analysts are often overwhelmed by the volume and sophistication of cyberattacks. By demonstrating how to use AI proactively for defense, we empower you to build more resilient systems. Having 10+ years of experience in this space, we’ve seen first-hand how AI tools can revolutionize threat detection and incident response.

AI-specific security tools for threat intelligence and anomaly detection

AI-powered threat intelligence platforms analyze vast datasets—including dark web forums, vulnerability databases, and attack reports—to identify emerging threats, attack patterns, and vulnerabilities specific to AI systems. They can predict future attack vectors and provide actionable insights.

Behavioral analytics for anomaly detection uses machine learning to baseline normal AI system behavior. This includes typical model access patterns, data flows, and inference patterns. Any deviation from this baseline can be flagged as a potential security incident, such as unusual model queries or unexpected data exports.

Automated vulnerability scanning is enhanced by AI, which can identify weaknesses in AI models, data pipelines, and related infrastructure with greater accuracy and speed. These tools can analyze code, configurations, and even model weights for potential security flaws.

Real-time monitoring is critical, with AI-driven Security Information and Event Management (SIEM) and Extended Detection and Response (XDR) solutions. These platforms can correlate security events across AI environments, providing a holistic view of potential threats and enabling rapid response.

Developing robust AI incident response strategies

Tailoring incident response for AI systems is essential due to the unique challenges involved. Detecting, containing, eradicating, and recovering from AI breaches—such as model tampering or data poisoning—requires specialized playbooks. Unlike traditional software incidents, AI breaches might manifest as subtle performance degradation or biased outputs, making detection more complex.

AI assistance can significantly accelerate detection and response, helping achieve target Mean Time To Detect (MTTD) and Mean Time To Respond (MTTR). AI can analyze logs, network traffic, and model behavior for anomalies, alerting human teams to potential threats faster. Automated response actions, guided by AI, can contain breaches by isolating compromised models or data pipelines.

Playbooks for AI incidents should be specific. For example, a prompt injection response playbook might involve immediate input filtering, rollback to a trusted model version, and an investigation into the source of the malicious prompt. A data poisoning playbook would focus on identifying corrupted data, retraining models, and enhancing data provenance.

Forensics in AI environments involve collecting and analyzing logs, model versions, and data snapshots. This helps understand the scope of a breach, identify the attack vector, and ensure complete eradication of the threat.

Further Reading: Leaders in AI-powered security solutions like Palo Alto Networks and Fortinet offer comprehensive resources and reports on leveraging AI for cyber defense. Their research provides deep insights into the practical applications of AI in security. For insights into AI’s role in financial security and fraud detection, you can also refer to Konvergense’s article on banking AI in the GCC.

Defending against new attack vectors targeting AI tools

Supply chain attacks on AI components are a growing concern. Securing open-source models, libraries, and pre-trained components means verifying their integrity, scanning for vulnerabilities, and maintaining a secure repository of approved components. Trusting external components blindly is a significant risk.

Adversarial AI attacks involve techniques designed to fool AI models. Defending against these includes adversarial training (exposing models to adversarial examples during training), input sanitization, and robust feature engineering. It’s about making your models more resilient to subtle manipulations.

Securing AI development environments is paramount. Protecting Integrated Development Environments (IDEs), code repositories, and collaboration tools used by AI teams from unauthorized access or malicious code injection prevents compromise at the source. Implement strong authentication and access controls.

Educating AI developers is a continuous process. Training on secure coding practices, threat awareness (especially unique AI threats), and responsible AI development ensures that security is baked into the culture. This human element is often the strongest defense.

Building a resilient AI security posture: Best practices and frameworks

Building a resilient AI security posture is not a one-time task; it’s a continuous journey. It involves integrating security into your organizational culture and processes, choosing the right frameworks and tools for your specific AI initiatives, and fostering a mindset of continuous improvement. With Konvergense’s 10+ years industry experience and our team of Certified X security professionals, we guide organizations through this complex landscape.

Organizations must recognize that the AI threat landscape is constantly evolving. What is secure today might be vulnerable tomorrow. Therefore, adaptability and proactive learning are key to maintaining a robust defense.